By Mike Schwartz, Founding Partner at ORIGYN Foundation

Following our enjoyable Twitter Spaces AMA this week (https://bit.ly/3v2vICQ), I noticed that several of our audience’s fantastic questions concerned ORIGYN’s tech and, in particular, what we’ve accomplished with mobile phones. So I thought I’d take a moment to elaborate on this topic and share some exciting updates on our ongoing research and development in this area.

ORIGYN’s technical R&D spans a broad range: from decentralized infrastructure and dApp development, through computer vision, artificial intelligence and machine learning, to robotics and IoT. These developments underpin many upcoming product releases designed to deliver significant value, but our mobile phone-based R&D remains particularly exciting.

More than a year ago, ORIGYN delivered its first live demonstration to several groups of CXOs and other executives. Together, they represented many of the world’s most important luxury and watchmaking companies, and they cycled through one technically decked-out room in Switzerland over three days.

The demonstration involved minting “Digital Twins” of luxury watches and then matching those twins back to the original artifacts. We achieved this with 100% accuracy using (yes, you guessed it) a mobile phone to perform the match. The demonstration also included live evidence of database cleansing, code execution scrolling on-screen in real time, and randomization devices to ensure the matching sequence wasn’t pre-loaded.

It was a sight to behold and an exciting event for us all, drawing several rounds of applause and audible signs that everyone knew they were witnessing a breakthrough. Even so, we remained very open about what we had achieved and what we still sought to advance in this part of our broader technical R&D. Since then, our team has focused on two key areas of improvement in this domain.

The first is usability. Any mobile phone-based user experience we offer must be a truly great one: easy to use, quick to reach a successful outcome, and even fun in its novelty. Nothing less meets the high standards ORIGYN seeks to uphold.

This is non-trivial and involves multiple layers of technology, including, to name a few: region-of-interest templating; blur detection and auto-focus checks; multi-frame auto-capture; variable and preset zoom; and filters for glare control and other feature-highlighting techniques.

Other inputs include optimized image stabilization and experiments with contrast, aperture, exposure and ISO. Much of this may require access to the phone’s native camera app and APIs. For the latest and most capable phones, that can mean beta-only access, early and sometimes clunky SDKs, or outright hacking experiments.
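ORIGYN hasn’t described how its capture pipeline implements these steps. Purely as an illustration, here is a minimal Python sketch of one common way blur detection and multi-frame auto-capture can fit together: score each frame in a short burst by the variance of its Laplacian (a widely used sharpness heuristic, swapped in here as an assumption) and keep the sharpest frame only if it clears a threshold. The names `laplacian_variance` and `pick_sharpest` and the `min_sharpness` threshold are hypothetical, and frames are plain lists of grayscale values rather than real camera buffers.

```python
from typing import List, Optional, Sequence

# Hypothetical frame type: a grayscale image as rows of pixel intensities (0-255).
Frame = List[List[float]]


def laplacian_variance(frame: Frame) -> float:
    """Variance of the 4-neighbour Laplacian over interior pixels.

    Sharp frames have strong local intensity changes and score high;
    blur smooths those changes out, so blurred frames score low.
    """
    height, width = len(frame), len(frame[0])
    if height < 3 or width < 3:
        raise ValueError("frame must be at least 3x3 pixels")
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Discrete Laplacian: sum of the 4 neighbours minus 4x the centre pixel.
            lap = (frame[y - 1][x] + frame[y + 1][x]
                   + frame[y][x - 1] + frame[y][x + 1]
                   - 4 * frame[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def pick_sharpest(frames: Sequence[Frame], min_sharpness: float) -> Optional[int]:
    """Return the index of the sharpest frame in a burst, or None if every
    frame scores below min_sharpness (meaning: keep capturing)."""
    if not frames:
        return None
    scores = [laplacian_variance(f) for f in frames]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best if scores[best] >= min_sharpness else None


# Usage: a featureless frame versus a high-detail checkerboard frame.
flat = [[128.0] * 4 for _ in range(4)]
checker = [[255.0 if (x + y) % 2 else 0.0 for x in range(4)] for y in range(4)]
print(pick_sharpest([flat, checker], min_sharpness=100.0))  # 1
```

In practice the threshold would need tuning per device and subject, and a production pipeline would more likely use an image library such as OpenCV than pure-Python loops.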

Despite all these options, considerations and challenges, our work continues to bear fruit each month as third-party technology advances keep accelerating our progress.

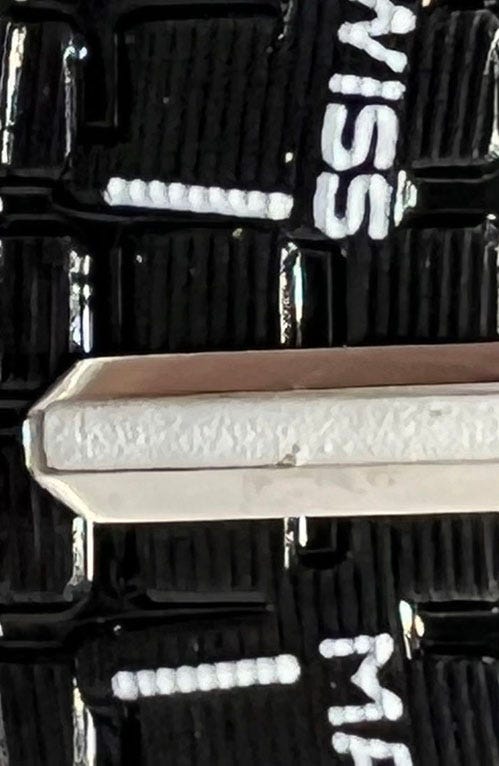

For example, here is an image I took using a hand-held iPhone 13 leveraging its macro lens. Grab one and try it yourself. It’s very cool! The world is changing fast in this domain, and the possibilities are becoming truly extraordinary.

The second area of focus for ORIGYN’s R&D engine in this domain is scalability. To deliver high matching confidence even across very large populations of items within a single SKU, we need to extend our region-of-interest maps for each SKU.

We want to do this programmatically, not just manually, as we onboard many new SKUs with larger populations across multiple asset classes. We believe we already know how to do this; it simply takes work and time.
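The post doesn’t spell out the math here, but a toy model shows why larger populations call for more regions of interest. Assume, purely for illustration, that each region yields one of `states_per_region` equally likely, independent values, so a combined signature over `regions` regions has `states_per_region ** regions` possible values. The birthday-problem approximation then estimates the chance that any two items in a population share a signature. The function names and parameters are hypothetical; real visual features are neither uniform nor independent, so this is not ORIGYN’s matching model.

```python
import math


def collision_probability(population: int, states_per_region: int, regions: int) -> float:
    """Birthday-bound estimate of P(at least two items share a signature),
    assuming independent, uniformly distributed per-region values (toy model)."""
    signatures = states_per_region ** regions     # exact integer count of signatures
    pairs = population * (population - 1) // 2     # number of distinct item pairs
    # 1 - exp(-pairs / signatures); expm1 keeps precision when the ratio is tiny.
    return -math.expm1(-pairs / signatures)


def regions_needed(population: int, states_per_region: int, max_collision: float) -> int:
    """Smallest number of regions keeping the collision estimate <= max_collision."""
    if states_per_region < 2:
        raise ValueError("each region must distinguish at least 2 states")
    if not 0 < max_collision < 1:
        raise ValueError("max_collision must be strictly between 0 and 1")
    regions = 1
    while collision_probability(population, states_per_region, regions) > max_collision:
        regions += 1
    return regions


for n in (1_000, 1_000_000):
    print(n, regions_needed(n, states_per_region=16, max_collision=1e-6))
# 1000 10
# 1000000 15
```

Under these assumptions the number of regions needed grows only logarithmically with population size, yet it does grow, which is consistent with the point above that large-population SKUs need extended, per-SKU region-of-interest maps.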

Finally, our R&D team has discovered some very cool matching techniques that we believe will allow components *within* items, not just externally visible ones, to be certified as authentic across large populations of items such as luxury watches (e.g., watch movements). And that is fantastic.

So, there you have it: the truth. ORIGYN can match. It has shown, live and on-site in front of the most knowledgeable of audiences, that it can match with 100% accuracy using a cell phone. Our current caveats and areas of focus are clear, and we’re genuinely excited about the progress that the global talent pool we draw on keeps making.

As we advance our work on user experience and scalability, we hope you too will enjoy using this incredible set of tools in ways that make your own world, and indeed everyone’s, better, safer, easier and fairer.

Perhaps you might also choose to be part of this in much bigger ways, including by helping us all govern this shared asset and improve it for the true creatives, value creators and bona fide owners all over the world.

In the meantime, you will see the fruits of our R&D in other game-changing products and services coming to market over the next few months. Rest assured that if ORIGYN is confident enough to guarantee its certifications, it is because the math tells us our techniques are very sound. And that’s what matters most.

Stay tuned and enjoy the ride.

For more updates on product and technology development at ORIGYN Foundation, follow us on Twitter and Telegram. Listen to the AMA at https://bit.ly/3v2vICQ.